Report: Weaponised AI Is Powering the Fifth Wave of Cybercrime

Group-IB has released its inaugural white paper, ‘Weaponized AI: Inside the Criminal Ecosystem Fueling the Fifth Wave of Cybercrime,’ which explores how AI is transforming and accelerating malicious activity within the cybercrime landscape.

Over the past 30 years, cybercrime has evolved through successive waves, from manual phishing in the late 1990s to industrialised ransomware, and on to the supply chain and ecosystem attacks that characterised the early 2020s. Group-IB has found a 371% surge in dark web forum posts featuring AI keywords since 2019, and a more than ten-fold increase in replies (1,199%). Now, adversaries are industrialising AI, turning once-specialist skills such as persuasion, impersonation, and malware development into on-demand services available to anyone with a credit card.
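
The percentage figures above can also be read as multipliers of the starting volume. The snippet below is a minimal sketch, assuming the reported percentages are increases over a 2019 baseline (the summary does not spell out the exact baseline); the helper name `fold_change` is hypothetical and used only for illustration.

```python
def fold_change(percent_increase: float) -> float:
    """Convert a percentage increase into a multiplier of the baseline.

    Assumption: the figure is an increase over the baseline, so a
    100% increase means the value doubled (multiplier 2.0).
    """
    return 1 + percent_increase / 100


# Figures quoted in the article; the helper itself is illustrative only.
for label, pct in [("posts", 371), ("replies", 1199)]:
    print(f"{label}: +{pct}% is about {fold_change(pct):.2f}x the baseline")
```

Under that assumption, a 1,199% increase corresponds to roughly 13 times the baseline volume, which is somewhat more than the ten-fold figure quoted alongside it.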

The fifth wave of cybercrime

Group-IB’s 2025 infiltration of dark web forums and underground marketplaces found that AI abuse dominated discussions, with 23,621 first posts and 298,231 replies. Interest peaked in 2023, after ChatGPT’s release to the general public in late 2022, with over 300,000 replies to AI posts. The peak coincided with the release of GPT-4 and rising regulatory and societal concerns.

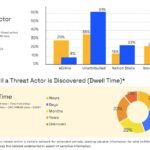

Unlike earlier waves of cybercrime, AI adoption by threat actors has been strikingly fast. AI is now firmly embedded as core infrastructure throughout the criminal ecosystem rather than an occasional exploit.

Crimeware accessible for the cost of a monthly streaming subscription

Group-IB investigations suggest that a few distinct seller types routinely market and package AI crimeware to lower-skilled buyers on underground markets, making sophisticated attacks accessible to novices. Vendors are mimicking aspects of legitimate SaaS businesses, from pricing tiers and subscription models to customer service support.

AI crimeware typically falls into three main categories: LLM exploitation, phishing and social engineering automation, and malware and tooling. These dark web offerings are affordable and often bundled together to make them more attractive to potential buyers.

The operating systems of modern cybercrime

Dark LLMs: Threat actors are moving past chatbot misuse and are creating proprietary Dark LLMs that are more stable and capable and have no ethical restrictions. Group-IB identifies at least three active vendors offering Dark LLMs, with subscription prices ranging from $30 to $200 per month and a customer base exceeding 1,000 users.

Jailbreak framework services and instructions: Jailbreaking uses reusable templates or instructions to bypass guardrails, getting legitimate LLMs to output disallowed, unsafe, or malicious content. Group-IB found that by the end of Q3 2025, the volume of jailbreak-related posts almost equaled the total volume for 2024, with a focus predominantly on ChatGPT and OpenAI models.

Deepfake-as-a-service: Group-IB’s monitoring of dark web forums shows a thriving marketplace for synthetic identity kits offering AI video actors, cloned voices, and even biometric datasets for as little as US$5. Group-IB analysts detected and exported more than 300 dark web posts from 2022 to September 2025 referencing deepfakes and KYC, with 2025 seeing a 52% increase in unique usernames. Attackers need as little as 10 seconds of audio, harvested from social media, webinars, or even past phone calls, to create convincing voice clones.

Craig Jones, former INTERPOL Director of Cybercrime and Independent Strategic Advisor, said: “AI has industrialized cybercrime. What once required skilled operators and time can now be bought, automated, and scaled globally. While AI hasn’t created new motives for cybercriminals (money, leverage, and access still drive the ecosystem), it has dramatically increased the speed, scale, and sophistication with which those motives are pursued. The shift marks a new era, where speed, volume, and sophisticated impersonation have fundamentally changed how crime is committed and how hard it is to stop.”

How defenders are mobilizing against weaponized AI

Weaponized AI is a global challenge that no single organization or regulator can tackle in isolation. Unlike traditional malware, AI-enabled attacks leave little forensic trace, making detection and attribution harder. For defenders, this landscape demands urgent adaptation.

Group-IB’s research underscores the need for intelligence-led security strategies that place adversary behavior at the center, combining predictive threat intelligence, fraud prevention, and deep visibility into underground ecosystems. Cross-border collaboration between the private sector, law enforcement, and regulators will be essential to counter this evolving threat.

Dmitry Volkov, CEO of Group-IB, adds: “From the frontlines of cybercrime, AI is giving criminals unprecedented reach. Today, AI is enabling criminals to scale scams with ease and take hyper-personalisation and social engineering to a new standard. In the near future, autonomous AI will carry out attacks that once required human expertise. Understanding this shift is essential to stopping the next generation of threats and ensuring defenders outpace attackers, moving towards an intelligence-led security strategy that combines AI-driven detection, fraud prevention, and deep visibility into underground criminal ecosystems.”